Face Tracking

Face tracking technology allows extended reality (XR) headsets to capture the movements and expressions of a participant's face. This is useful for recording participants' facial reactions, such as when a participant raises their eyebrows, drops their jaw, closes their eyes, or frowns. This data can then be used to draw conclusions about the emotions or reactions a participant might have had during the experience.

How Face Tracking Works

- Built-in Face Tracking: Some XR headsets come with built-in face tracking capabilities, using internal sensors and cameras to capture facial movements.

- Additional Trackers: Other headsets may require purchasing additional trackers or accessories to capture detailed face tracking data.

Example: Face tracking on Meta Quest Pro

The Meta Quest Pro has inward-facing sensors that can detect the participant's facial movements and expressions. Here is how it works:

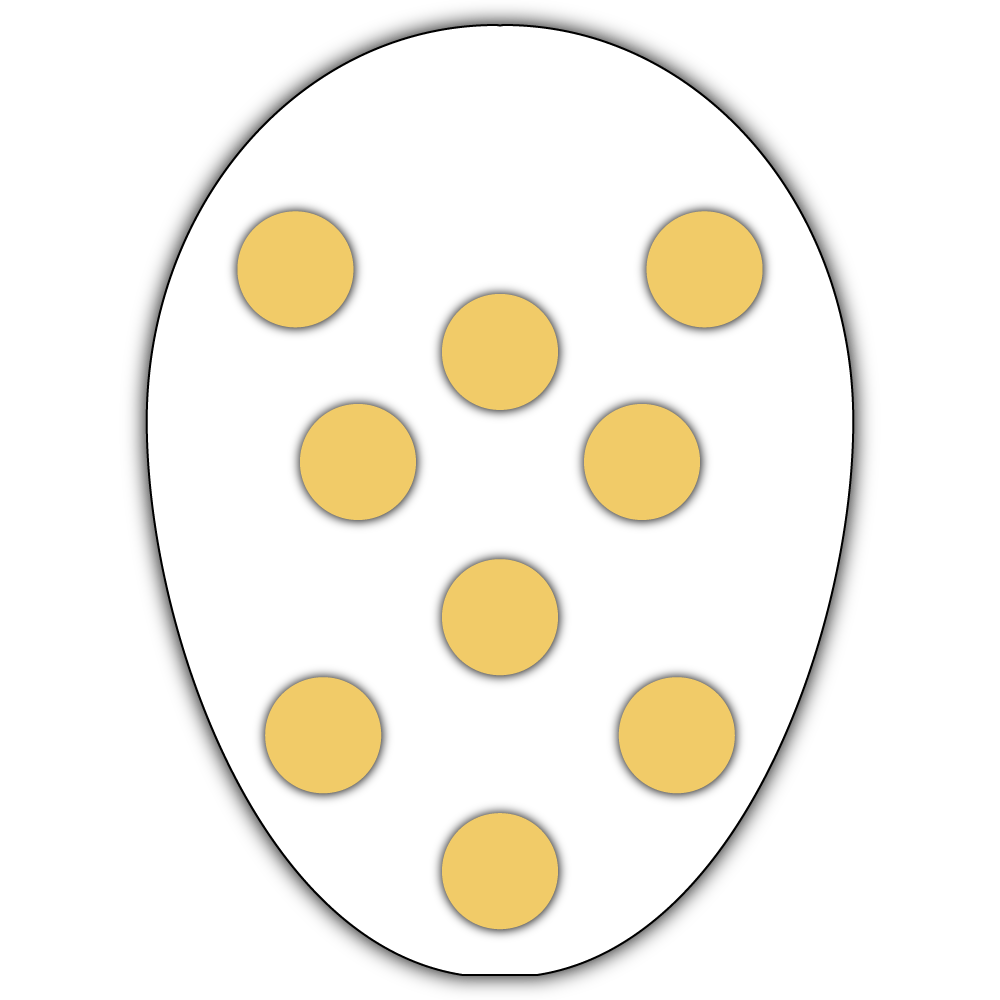

If face tracking is enabled, the headset analyzes images of the participant's face in real time to produce a set of numbers that represent estimates of their gaze direction and facial movements. The Meta Quest Pro captures up to 64 different points on the participant's face. You can visualize all of them from the Data Capture window.
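
To make the "set of numbers" idea more concrete, here is a minimal Python sketch of how one frame of face tracking data might be interpreted. It is only an illustration: the expression names, the 0-to-1 value range, the 0.5 threshold, and the `active_expressions` helper are all assumptions made for this example, not the headset's or any SDK's actual output format.

```python
from typing import Dict, List


def active_expressions(weights: Dict[str, float], threshold: float = 0.5) -> List[str]:
    """Return the names of expressions whose weight meets the threshold.

    Assumes each weight is an estimate between 0.0 (not present)
    and 1.0 (fully expressed). This range is an assumption for the sketch.
    """
    return sorted(name for name, weight in weights.items() if weight >= threshold)


# Hypothetical single frame of face tracking data (names are illustrative only).
frame = {
    "jaw_drop": 0.82,
    "brow_raise_left": 0.10,
    "eyes_closed_left": 0.05,
    "frown": 0.61,
}

print(active_expressions(frame))  # ['frown', 'jaw_drop']
```

In practice, a threshold like this would need to be tuned per study, since resting values and intensity can differ between participants.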